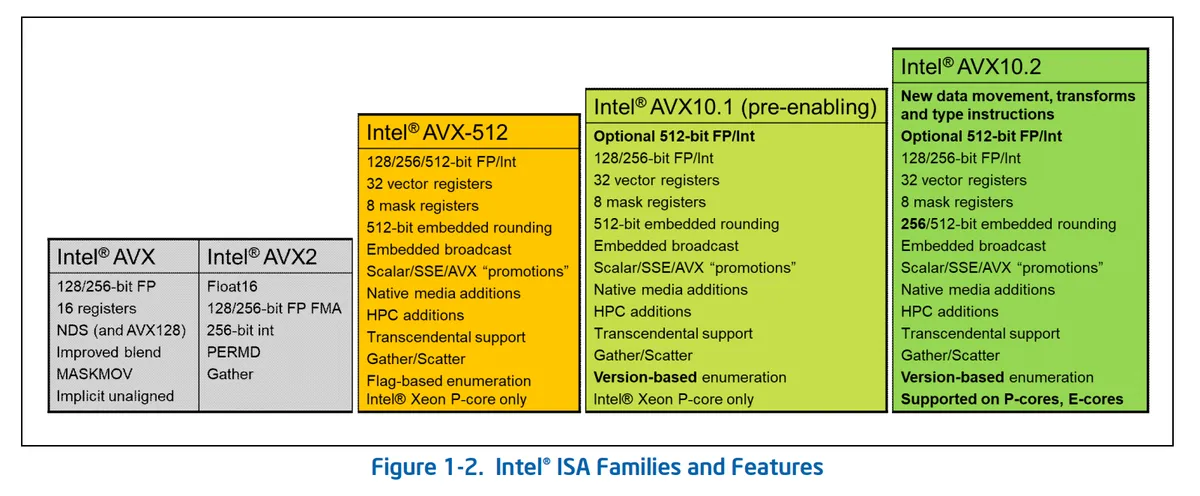

The converged AVX10 ISA will include "AVX-512 vector instructions with an AVX512VL feature flag, a maximum vector register length of 256 bits, as well as eight 32-bit mask registers and new versions of 256-bit instructions supporting embedded rounding," and this version will run on both p-cores and e-cores.

However, the e-cores will be limited to the converged AVX10's maximum 256-bit vector length, while P-cores can use 512-bit vectors. This feels akin to Arm's support for variable vector widths with SVE.

Chips arriving after Granite Rapids will support AVX10.2, which adds support for the converged 256-bit vector lengths and other new features, like new AI data types and conversions, data movement optimizations, and standards support. All future Xeon processors will continue fully supporting all AVX-512 instructions to ensure that legacy apps function normally. Intel will freeze the AVX-512 ISA when AVX10 debuts, and all future use of AVX-512 instructions will occur through the AVX10 ISA. To address developer feedback (obviously negative), Intel also plans to significantly simplify its AVX10 enumeration methods

Intel also announced the new APX (Advanced Performance Extensions) today (not to be confused with the old-school iAPX 432). Intel claims APX-compiled code contains 10% fewer loads and 20% fewer stores than the same code compiled for an Intel 64 baseline. Intel also says that register accesses are both faster and consume significantly less dynamic power than complex load and store operations. Interestingly, the new APX finds a new use for the 128B area that was left unused when Intel abandoned MPX back in 2019, and repurposes it for XSAVE.

Intel claims it has implemented APX in such a way that it will not impact the silicon area or power consumption of the CPU core. Here are APX's top-level features:

- 16 additional general-purpose registers (GPRs) R16–R31, also referred to as Extended GPRs (EGPRs) in this document

- Three-operand instruction formats with a new data destination (NDD) register for many integer instructions

- Conditional ISA improvements: New conditional load, store and compare instructions, combined with an option for the compiler to suppress the status flags writes of common instructions

- Optimized register state save/restore operations

- A new 64-bit absolute direct jump instruction

For anyone confused: AVX10.2 is code-compatible with AVX512, but not binary-compatible. Meaning developers will have to recompile AVX512 code to run on AVX10.2 chips.

I have mixed feelings about it. On the one hand, it's a good that Intel has found how to support 512-bit vectors. On the other hand, AVX10 is just a way to bail out the Alder Lake chimera. Why would any other CPU vendor want to adopt this ISA extension? AMD already have AVX512 up and running without any tricks. No one except Intel is interested as solving Intel's problems. The AVX10.2 lacks any innovations others would like to adopt.

Let's look at variable vector width comparison from Tomshardware, for example. In ARM vector can vary between 128 and 2048 bits. In Intel AVX10 512-bit vector won't crush on core with 256-bit FPU, nothing more. We have no promise that in 10 years 1024-bit AVX1024 vector won't crush on 512-FPU. And so we'll have to reinvent the wheel for another time, again.

APX arguably deserves a more positive feedback. The claim about "no additional silicon area or power consumption" is particularly interesting. Up to 10% fewer loads and 20% fewer stores merely at a cost of zero transistors and one compiler flag? Sounds almost like a magic. I didn't get a clear picture on how it will (or will not?) overlap with all existing AVX stuff we already have even after reading Intel's paper, though. So have no estimates on how really useful this ISA will be.

What do you guys think?

References

1. Intel's New AVX10 Brings AVX-512 Capabilities to E-Cores

https://www.tomshardware.com/news/intel ... to-e-cores

2. Intel Unveils AVX10 and APX Instruction Sets: Unifying AVX-512 For Hybrid Architectures

https://www.anandtech.com/show/18975/in ... hitectures

3. Introducing Intel® Advanced Performance Extensions (Intel® APX)

https://www.intel.com/content/www/us/en ... s-apx.html